Docker's FST

A wrapper for the OpenFST and PywrapFST libraries (2016)

Finite State Transducers

FSTs, or Finite State Transducers (or Automata), are tools one can use for creating language models. They were especially popular in the era that pre-dated deep learning (so before not only transformers but also RNNs were introduced). FSTs are considered generative language models (LMs) because the resulting machine is a graph representation of all the strings found in that language. They can also handle situations in which the training data contains little evidence for a particular pattern (via backoff, for instance). Generally, these LMs cope better with low-resource data than some deep learning approaches.
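To make the backoff idea concrete, here is a minimal sketch in plain Python. It is not how OpenFST or OpenGRM implement it. It assumes a toy three-sentence corpus and uses a simple "stupid backoff" style score with a hypothetical scaling factor `alpha=0.4`: when a bigram was never seen in training, the score falls back to a scaled unigram frequency instead of zero. The function names `train` and `score` are illustrative only, and the scores are not normalized probabilities.

```python
from collections import Counter


def train(sentences):
    """Count unigrams and bigrams over whitespace-tokenized sentences."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams


def score(prev, word, unigrams, bigrams, alpha=0.4):
    """Score `word` given `prev`, backing off to unigrams for unseen bigrams."""
    if bigrams[(prev, word)] > 0:
        # Seen bigram: relative frequency of `word` after `prev`.
        return bigrams[(prev, word)] / unigrams[prev]
    # Unseen bigram: back off to the scaled unigram relative frequency.
    total = sum(unigrams.values())
    return alpha * unigrams[word] / total


# Toy corpus (an assumption for illustration only).
unigrams, bigrams = train(["the cat sat", "the dog sat", "a cat ran"])

seen = score("the", "cat", unigrams, bigrams)    # bigram occurs in training
unseen = score("the", "ran", unigrams, bigrams)  # bigram never occurs
print(seen, unseen)
```

The unseen pair still receives a small positive score, which is the behavior the paragraph above describes. FST-based toolkits typically encode this fallback as special backoff arcs in the graph rather than as an `if` branch.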

A little more

Technically, these repos are based on the OpenFST library implementation, the Pynini implementation by Kyle Gorman, and the OpenGRM implementation. The repos here may help if you are working in Python and want to use the OpenFST library, Pynini, and GRM. By the time you read this, these repos may no longer track the latest versions, but they can still inspire you to set up one of your own, based on the recipes available in each of the Docker images associated with the corresponding library. Dockerizing these tools/libraries is meant to prevent a messy installation and to give you a smooth start when preparing to work with them.
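Once inside a container (or any Python environment), a quick smoke test can confirm whether the bindings are importable. The sketch below uses only the standard library and assumes the usual module names, `pywrapfst` for the OpenFST Python extension and `pynini` for Pynini. The helper name `available` is hypothetical and not part of these repos.

```python
from importlib.util import find_spec


def available(modules=("pywrapfst", "pynini")):
    """Return a dict mapping each module name to whether it can be imported."""
    return {name: find_spec(name) is not None for name in modules}


status = available()
for name, ok in status.items():
    print(f"{name}: {'found' if ok else 'missing'}")
```

If either module is reported as missing, the image was probably built without the Python bindings, and the recipe in the corresponding Docker image is the place to look.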

Repos link

The images below and their associated repos can be found in the Docker repos.

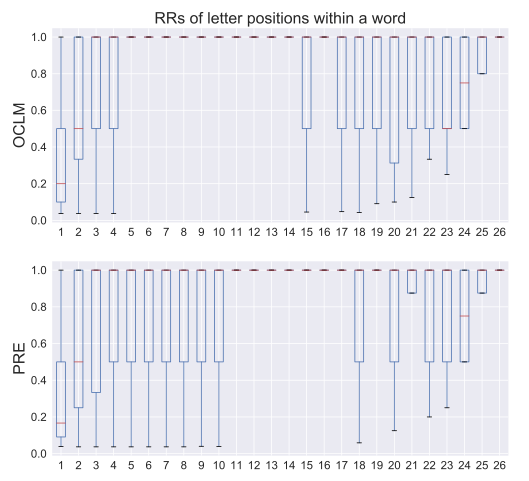

How did I use it? In the project (Dudy et al., 2018) we used the specializer to create the second baseline (PreLM).

We have this